Hi, I am Jian. Currently, I work at Monash University as a PhD candidate, under the supervision of Prof. Aldeida Aleti, Prof. Chunyang Chen, and Prof. Hongyu Zhang.

Previously, I worked as a PhD candidate (research assistant) at the University of Zurich, supervised by Prof. Harald C. Gall. Before that, I obtained my master’s degree in machine learning at KTH Royal Institute of Technology, supervised by Prof. Martin Monperrus. Earlier still, I completed my bachelor’s degree in computer science (the elite class) at Shandong University, supervised by Prof. Jun Ma.

My research investigates the interplay between software engineering and machine learning, focusing on how engineering principles can guide targeted repairs to language models (LMs), a direction termed LM Repair. I also study the underlying mechanism, the inherent semantic property of LMs, termed LM Semantics. For academic inquiries, please contact me via email.

🔥 News

- 2025.09: 🚁 I started a research internship at Huawei Hong Kong Research Center …

- 2024.10: 🚁 I started a research visit to the NLP Lab @ Tsinghua University …

- 2024.01: ⭐ Proud to share our series of work on LM Semantics: Vocabulary-Defined Semantics, Transition on Semantics, Semantic-based Knowledge Transfer, …

- 2023.03: ⭐ Proud to share our series of work on LM Repair: Neuron-Targeted Repair, Token-Aware Repair, Semantic Optimization for Repair, …

💻 Featured Work

Semantic-based Optimization for Repairing LLMs: Case Study on Code Generation

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

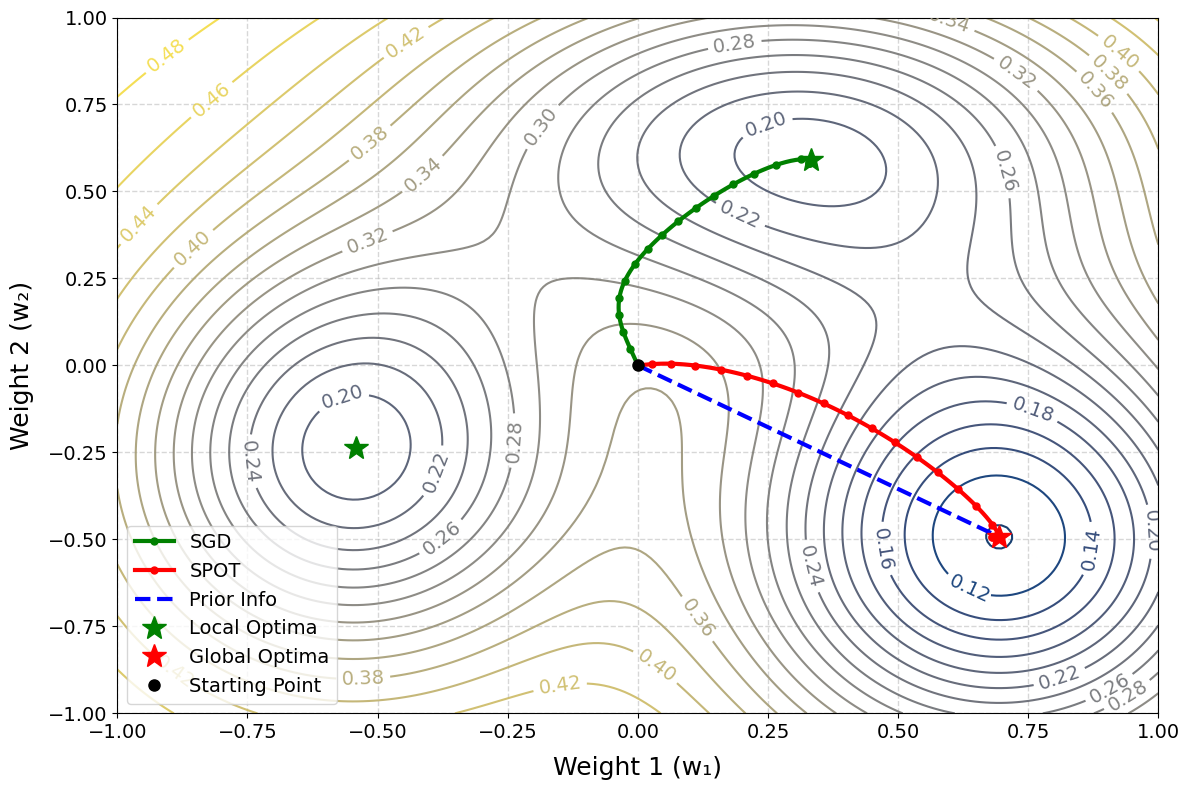

STAR is a novel semantic-based optimization approach for LM repair that efficiently locates and patches buggy neurons using statistical insights and analytical formulas. It outperforms prior methods in effectiveness and efficiency, with fewer side effects.

Semantic-Aware Layer-Freezing for Computation-Efficient Fine-Tuning of LMs

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

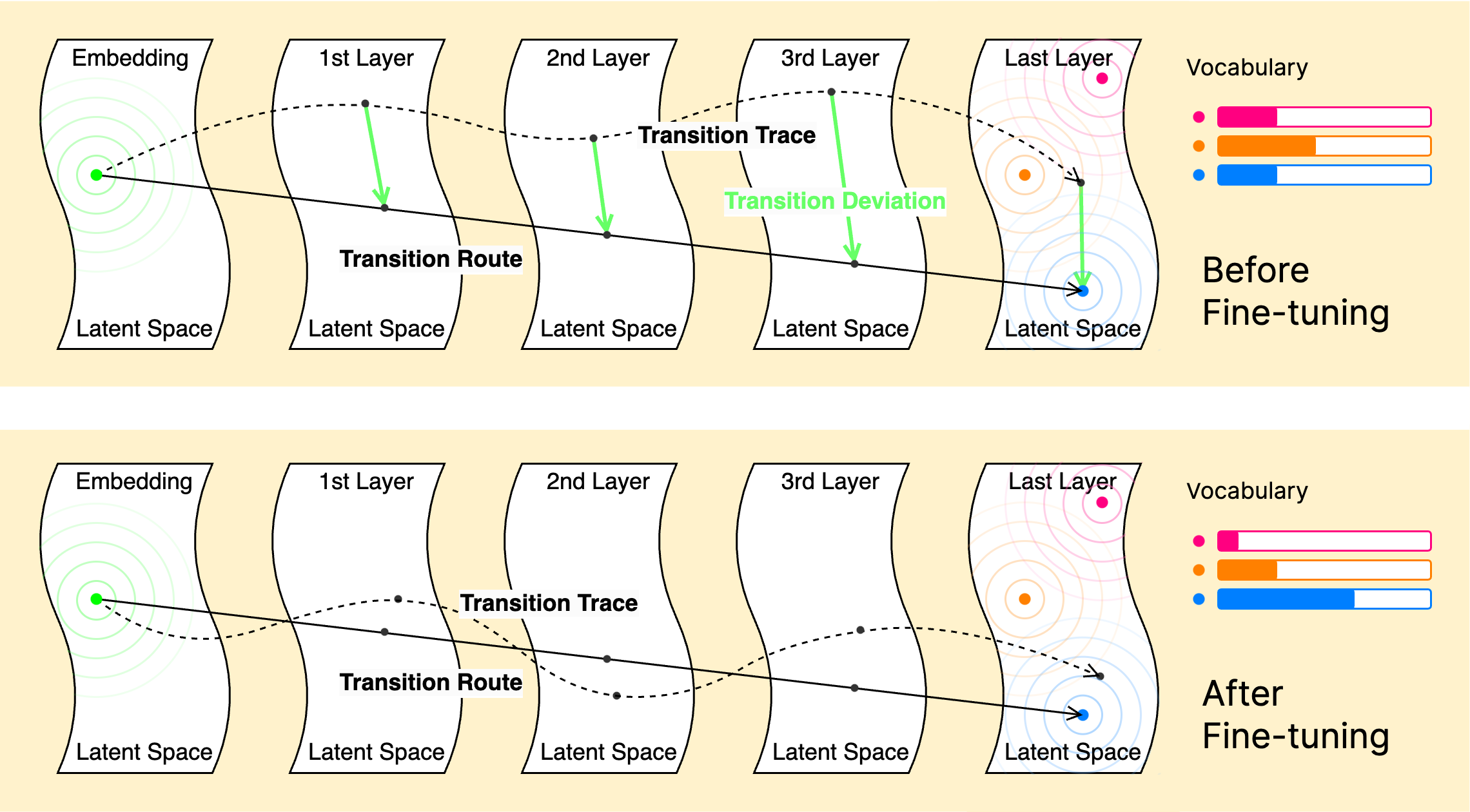

Our semantic-based layer-freezing approach improves the efficiency of language model fine-tuning by determining where to fine-tune, based on a detailed semantic analysis of the model’s inference process, and it outperforms existing methods.

Vocabulary-Defined Semantics: Latent Space Clustering for Beyond-Context Learning

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

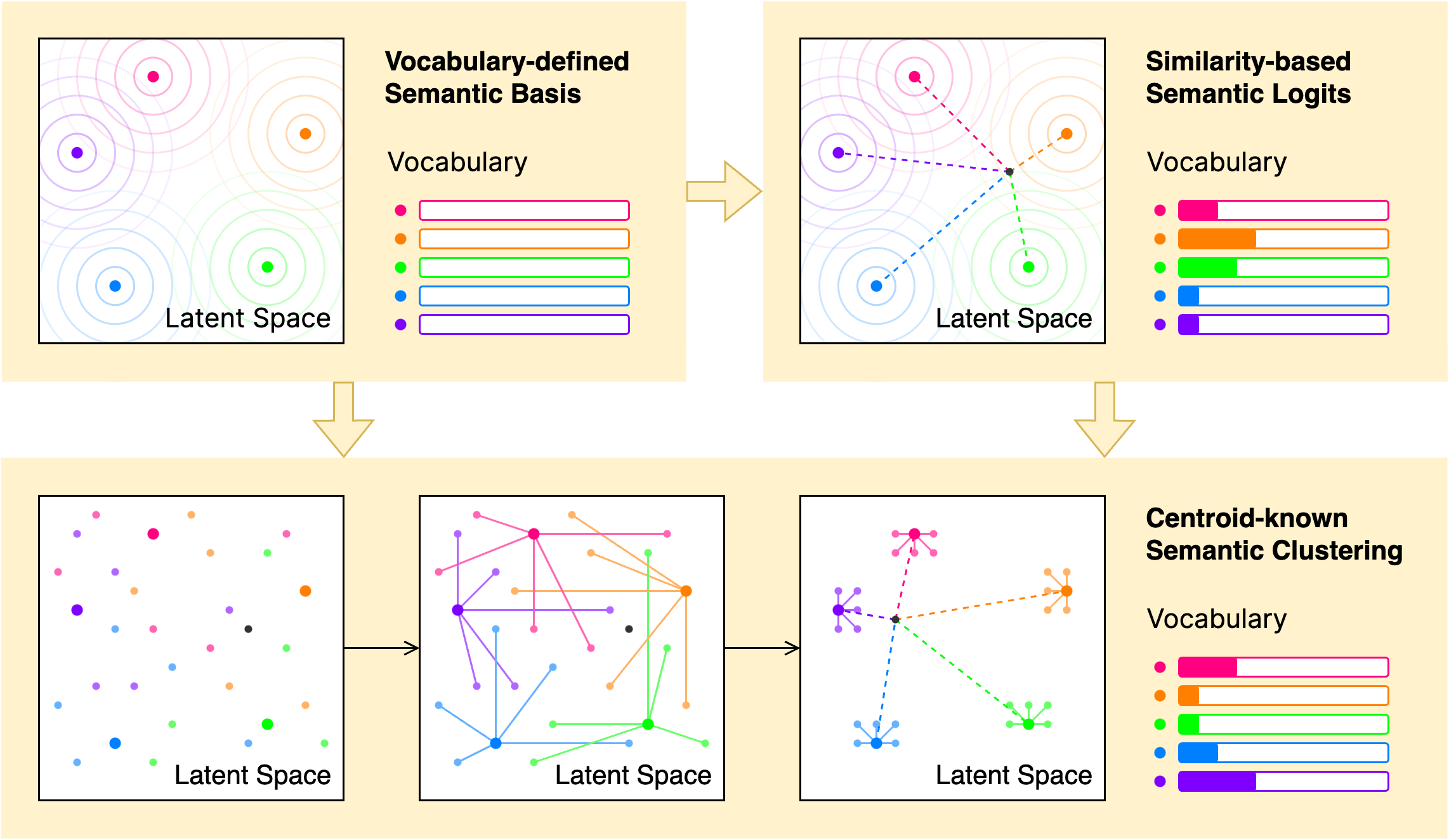

We propose “vocabulary-defined semantics” to reformulate in-context learning as a clustering problem, aligning semantic properties of language models with downstream data, outperforming state-of-the-art methods in effectiveness, efficiency and robustness.

Neuron Patching: Semantic-based Neuron-level LM Repair for Code Generation

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

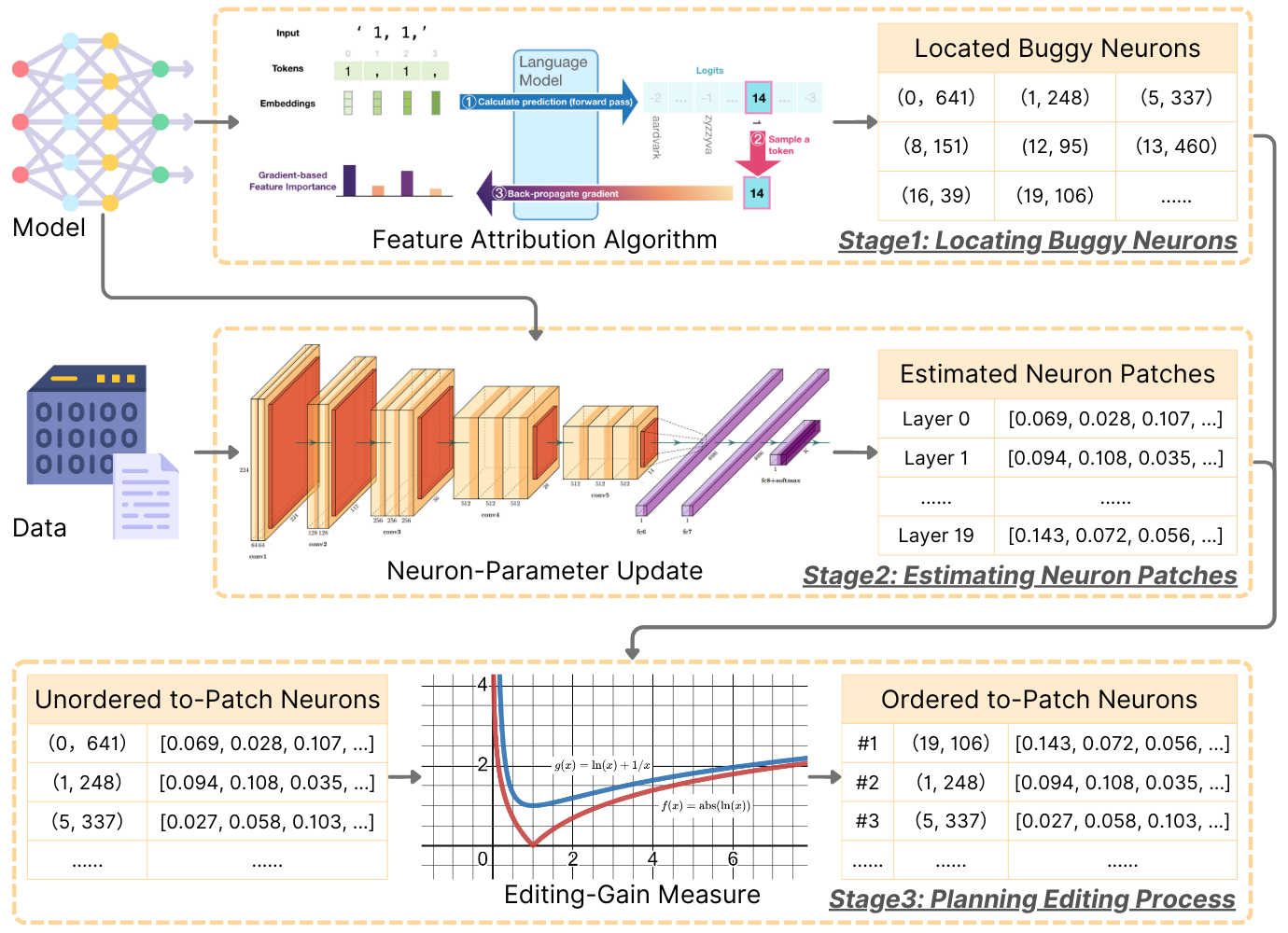

MINT is an efficient and reliable technique for repairing large language models in software engineering. It can successfully solve model failures by patching merely 1 or 2 neurons, outperforming state-of-the-art methods in coding tasks.

Towards Top-Down Automated Development in Limited Scopes: A Neuro-Symbolic Framework from Expressibles to Executables

Jian Gu, Harald C. Gall

Deep code generation integrates neural models into software engineering to generate code, but it requires enhancements for project-level tasks. We suggest a taxonomy of code data and introduce a semantic pyramid framework to improve software development processes.

📝 Selected Papers

Software Engineering for Machine Learning (SE4AI)

[ArXiv] Beyond Neural Incompatibility: Easing Cross-Scale Knowledge Transfer in Large Language Models through Latent Semantic Alignment

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

[ICSE'26] Semantic-based Optimization for Repairing LLMs: Case Study on Code Generation

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

[ACL'25] Semantic-Aware Layer-Freezing for Computation-Efficient Fine-Tuning of LMs

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

[ArXiv] Vocabulary-Defined Semantics: Latent Space Clustering for Beyond-Context Learning

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

[ArXiv] Focus-aware Neurons: Robust LM Repair leveraging Selective Attention

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

[ArXiv] Neuron Patching: Semantic-based Neuron-level LM Repair for Code Generation

Jian Gu, Aldeida Aleti, Chunyang Chen, Hongyu Zhang

Machine Learning for Software Engineering (AI4SE)

[FSE'23] Towards Top-Down Automated Development in Limited Scopes: A Neuro-Symbolic Framework from Expressibles to Executables

Jian Gu, Harald C. Gall

[SANER'22] Assemble Foundation Models for Automatic Code Summarization

Jian Gu, Pasquale Salza, Harald C. Gall

[ICSME'21] Multimodal Representation for Neural Code Search

Jian Gu, Zimin Chen, Martin Monperrus

[TSE 2022] On the Effectiveness of Transfer Learning for Code Search

Pasquale Salza, Christoph Schwizer, Jian Gu, Harald C. Gall

[TSE 2021] Automated Classification of Overfitting Patches with Statically Extracted Code Features

He Ye, Jian Gu, Matias Martinez, Thomas Durieux, Martin Monperrus

“Machine intelligence is the last invention that humanity will ever need to make. Machines will then be better at inventing than we are, and they’ll be doing so on digital timescales.” – Nick Bostrom